PyTorch on Twitter: "We're excited to announce support for GPU-accelerated PyTorch training on Mac! Now you can take advantage of Apple silicon GPUs to perform ML workflows like prototyping and fine-tuning. Learn…"

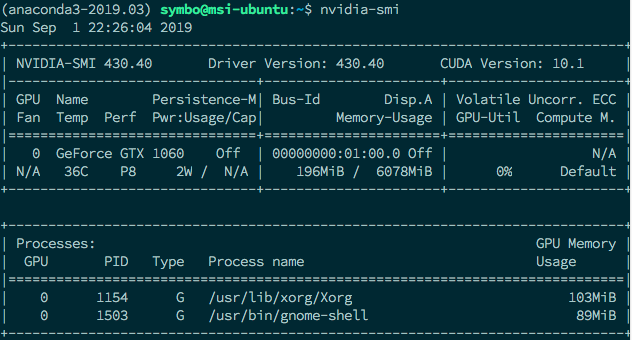

Use GPU in your PyTorch code. Recently I installed my gaming notebook… | by Marvin Wang, Min | AI³ | Theory, Practice, Business | Medium

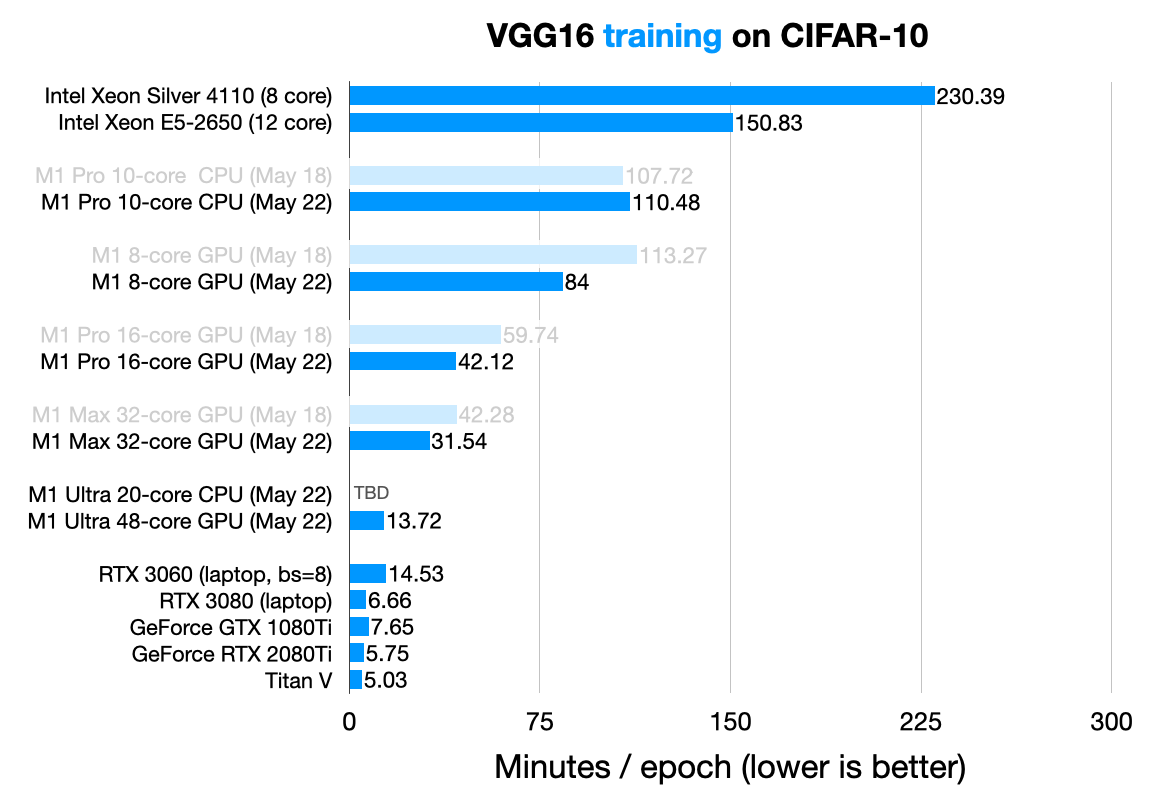

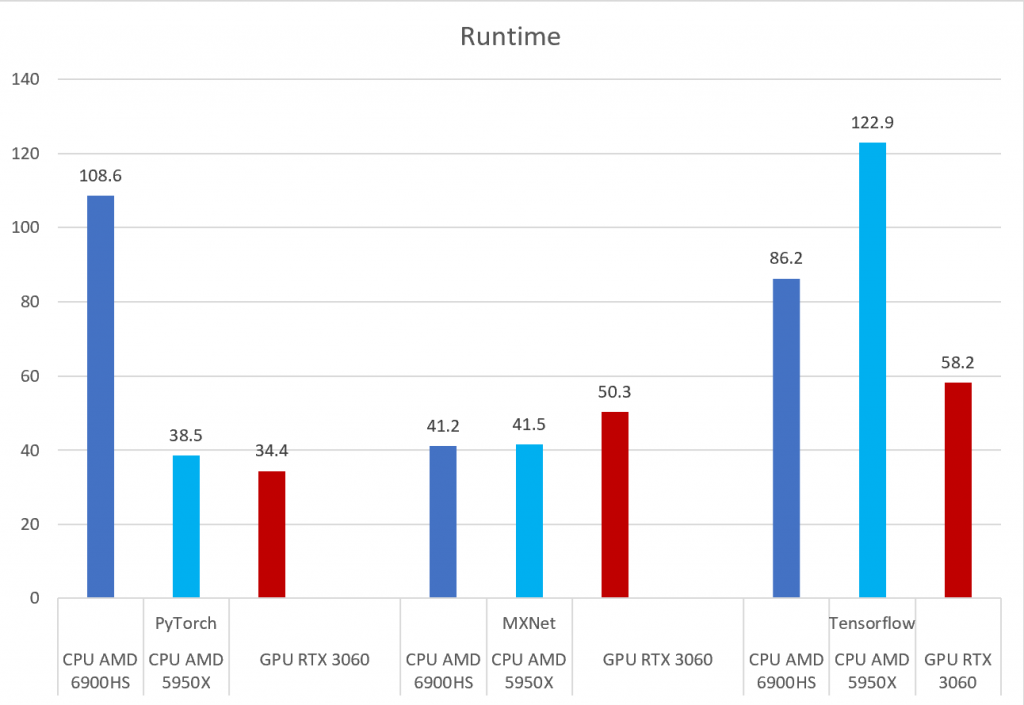

PyTorch, Tensorflow, and MXNet on GPU in the same environment and GPU vs CPU performance – Syllepsis